Understanding the Spark hierarchy, in terms of both hardware and software design, will help you develop better-optimized Spark applications.

Hardware hierarchy

Main components

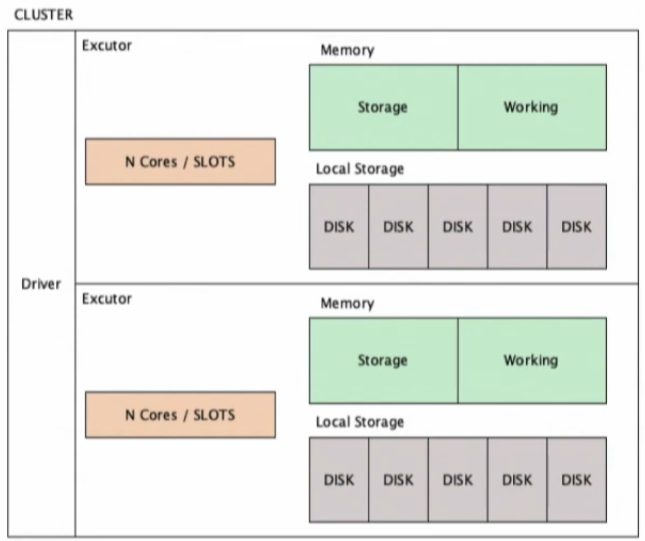

Once a Spark application is deployed to a cluster, it needs one node to host the driver (master) and one or more nodes to host the executors (workers) that process the tasks. The driver node is usually a small server that only orchestrates the tasks running on the executors, so the executors are where resources (RAM, cores, disk) and performance tuning matter most. The notes below therefore cover the main hardware components that make up an executor.

- Cores: a core can be thought of as a slot that workload is put into. Each core can take one piece of work at a time.

- Memory: by default, 90% of the server's memory is given to Spark. At a high level, this memory is divided into Storage and Working memory.

  - Storage: caches and persists data

  - Working: performs in-memory computation

- Disks: HDDs, SSDs, or NFS drives, where data is written from memory during shuffling. Faster disks make for faster shuffles.
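To make the storage/working split more concrete, here is a rough sketch of how one executor's heap could be divided. It assumes Spark's unified memory manager with its documented defaults (300 MiB reserved, `spark.memory.fraction` = 0.6, `spark.memory.storageFraction` = 0.5). Those settings and the 8 GiB heap are illustrative assumptions, not figures from this post, and the function name `memory_regions` is hypothetical. "Working" memory corresponds to what Spark calls execution memory.

```python
# Rough model of how an executor heap is divided (assumed defaults, see above).
RESERVED_MIB = 300  # memory Spark sets aside for its own internals


def memory_regions(heap_mib, memory_fraction=0.6, storage_fraction=0.5):
    """Return the approximate size in MiB of each memory region."""
    usable = heap_mib - RESERVED_MIB
    unified = usable * memory_fraction      # shared by storage and execution
    storage = unified * storage_fraction    # cache / persist
    execution = unified - storage           # shuffles, joins, sorts, aggregations
    user = usable - unified                 # user data structures, metadata
    return {
        "reserved": float(RESERVED_MIB),
        "storage": storage,
        "execution": execution,
        "user": user,
    }


regions = memory_regions(8 * 1024)  # hypothetical 8 GiB executor heap
for name, mib in regions.items():
    print(f"{name:>10}: {mib:8.1f} MiB")
```

The storage/execution boundary is soft in this model: either side can borrow unused space from the other, so the numbers are starting points rather than hard limits.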

Data speed

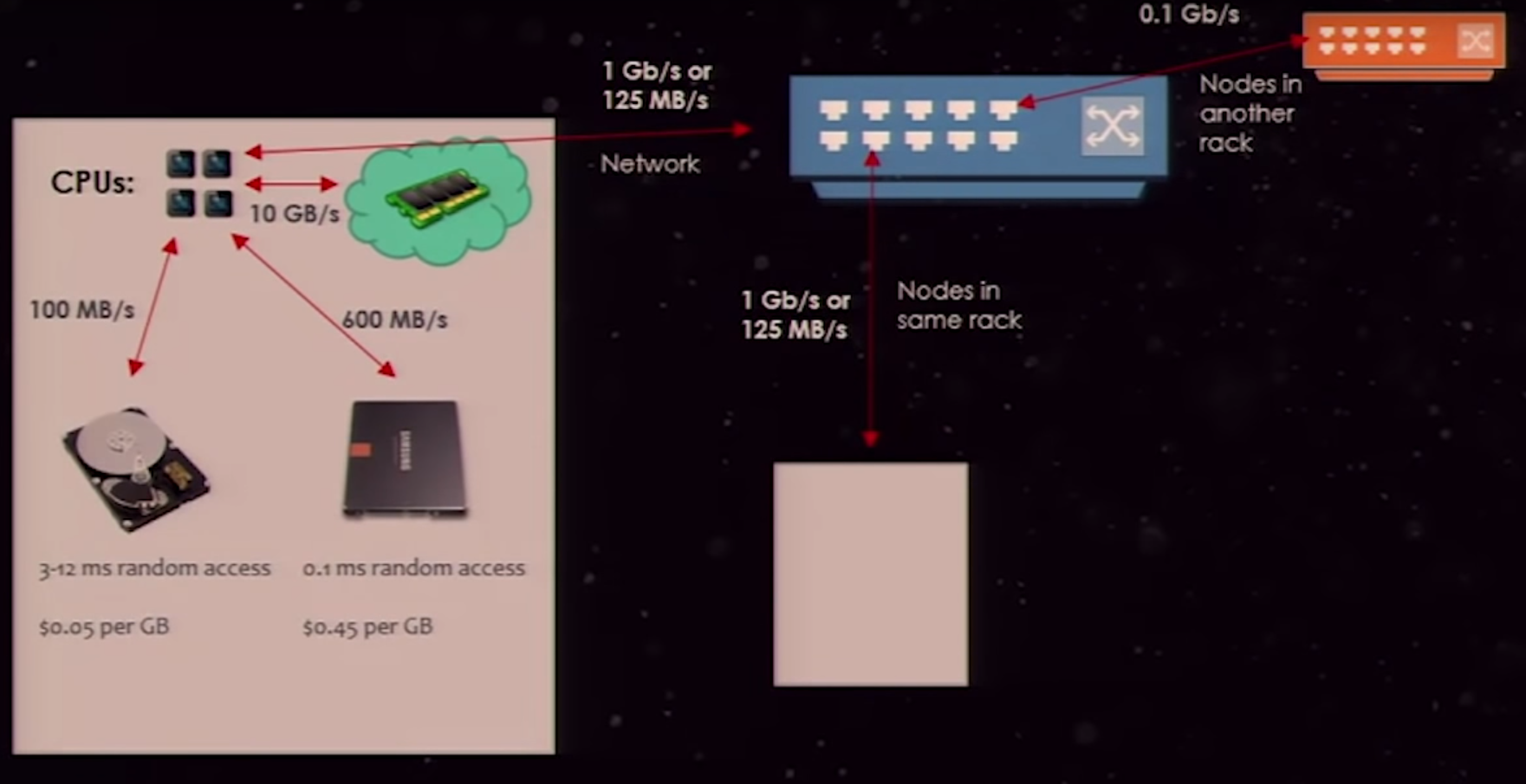

It's worth noting that optimizing reads and writes to disk or over the network helps performance, because these two transfer media can become hardware bottlenecks.

- Data movement within memory can reach a speed of about 10 GBps. Spark mainly processes data in memory, which is why it is so fast.

- Reading from or writing to a hard disk lowers the speed to about 100 MBps; an SSD is in the range of 600 MBps.

- Data travelling over the network drops to about 125 MBps.
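As a quick back-of-the-envelope check, the figures above can be turned into transfer times. The sketch below uses only the throughput numbers quoted in this section; the 10 GB payload and the helper name `transfer_seconds` are made up for illustration.

```python
# Throughput figures quoted above, in MB per second.
THROUGHPUT_MBPS = {
    "memory": 10_000,
    "hdd": 100,
    "ssd": 600,
    "network": 125,
}


def transfer_seconds(size_mb, medium):
    """Idealized time to move size_mb megabytes through the given medium."""
    return size_mb / THROUGHPUT_MBPS[medium]


DATA_MB = 10_000  # hypothetical 10 GB payload, taking 1 GB = 1000 MB

for medium in THROUGHPUT_MBPS:
    print(f"{medium:>8}: {transfer_seconds(DATA_MB, medium):7.1f} s")
```

Under these assumptions the same data takes about a second in memory but over a minute across the network, which is why avoiding unnecessary disk and network traffic pays off.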

Software hierarchy

Transformation (lazy)

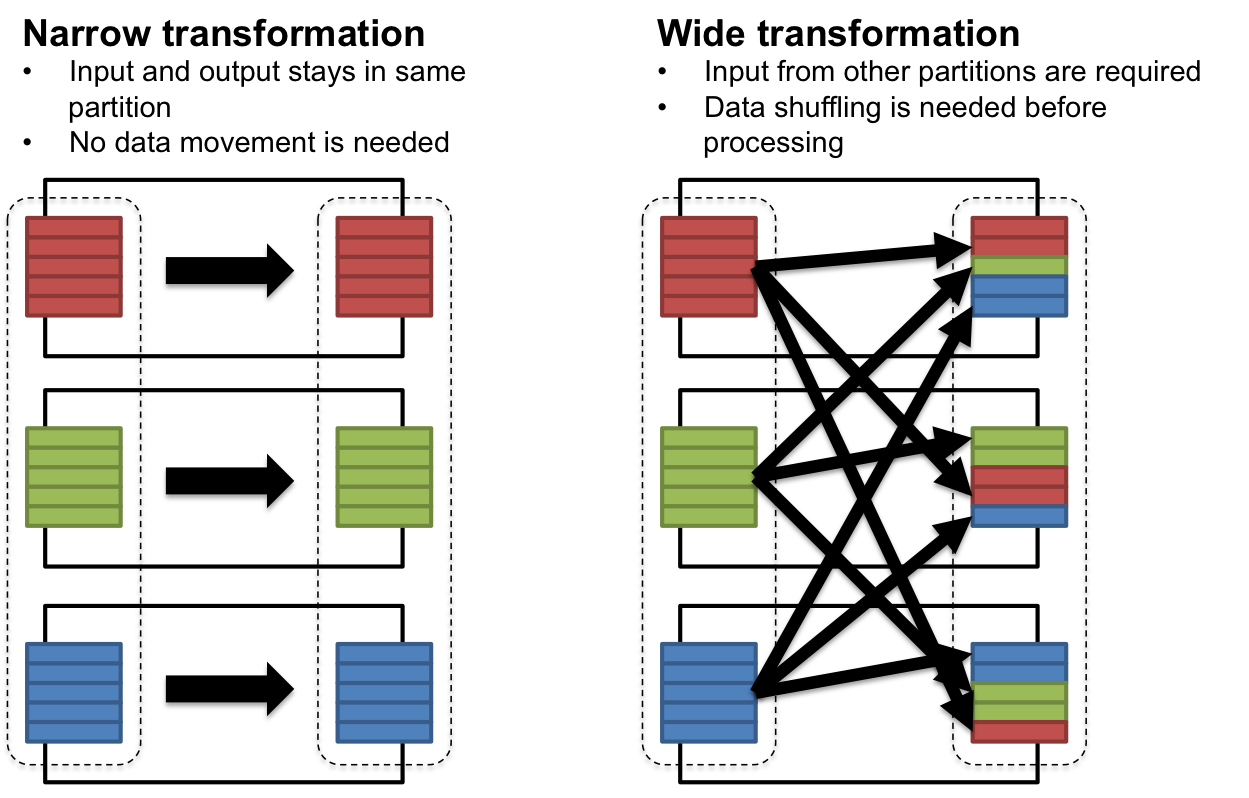

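There are two types of transformations:

- Narrow: all the data needed for the transformation is already available to the executor, within a single partition (e.g. map, filter)

- Wide: data has to be gathered from other partitions, possibly on other nodes, which triggers a shuffle (e.g. groupBy, join)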
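The difference can be illustrated with a plain-Python model of partitions (no Spark involved). This is only a sketch: the sample records and the helper names `narrow_map`, `partial_sums`, and `combine` are hypothetical, and each inner list stands in for a partition that could live on a different executor.

```python
from collections import Counter

# Three partitions of (key, value) records.
partitions = [
    [("a", 1), ("b", 2)],
    [("a", 3), ("c", 4)],
    [("b", 5), ("a", 6)],
]


def narrow_map(partition, fn):
    """Narrow: each output partition is built from one input partition only."""
    return [fn(record) for record in partition]


# Doubling every value needs no data from any other partition.
doubled = [narrow_map(p, lambda kv: (kv[0], kv[1] * 2)) for p in partitions]


def partial_sums(partition):
    """Per-key sums computed from the records of a single partition."""
    sums = Counter()
    for key, value in partition:
        sums[key] += value
    return dict(sums)


def combine(partials):
    """Wide: the final per-key sum needs the partial results of every partition."""
    total = Counter()
    for partial in partials:
        total.update(partial)  # Counter.update adds values key by key
    return dict(total)


per_partition = [partial_sums(p) for p in partitions]
totals = combine(per_partition)

print(doubled[0])      # transformed without looking at other partitions
print(per_partition)   # key "a" shows up in all three partitions
print(totals)          # complete only after data from all partitions is combined
```

Key "a" appears in every partition, so no single partition can produce its total alone. Bringing those pieces together is the data movement that a wide transformation pays for.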

Action (eager)

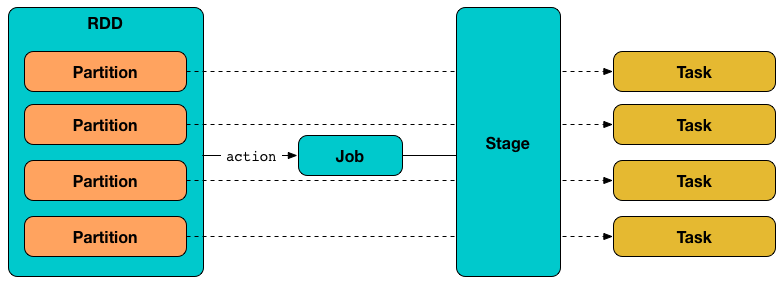

Spark evaluates actions eagerly: an action triggers the DAG scheduler to run the transformations defined so far. Depending on the transformations involved, an action can launch one or more jobs.

- Jobs:

  - 1 job can have many stages

- Stages:

  - 1 stage can have many tasks

  - Tasks within a stage run the same function on different partitions of the data

- Tasks: each task consumes 1 core (slot) to process one partition of data in the configured RAM

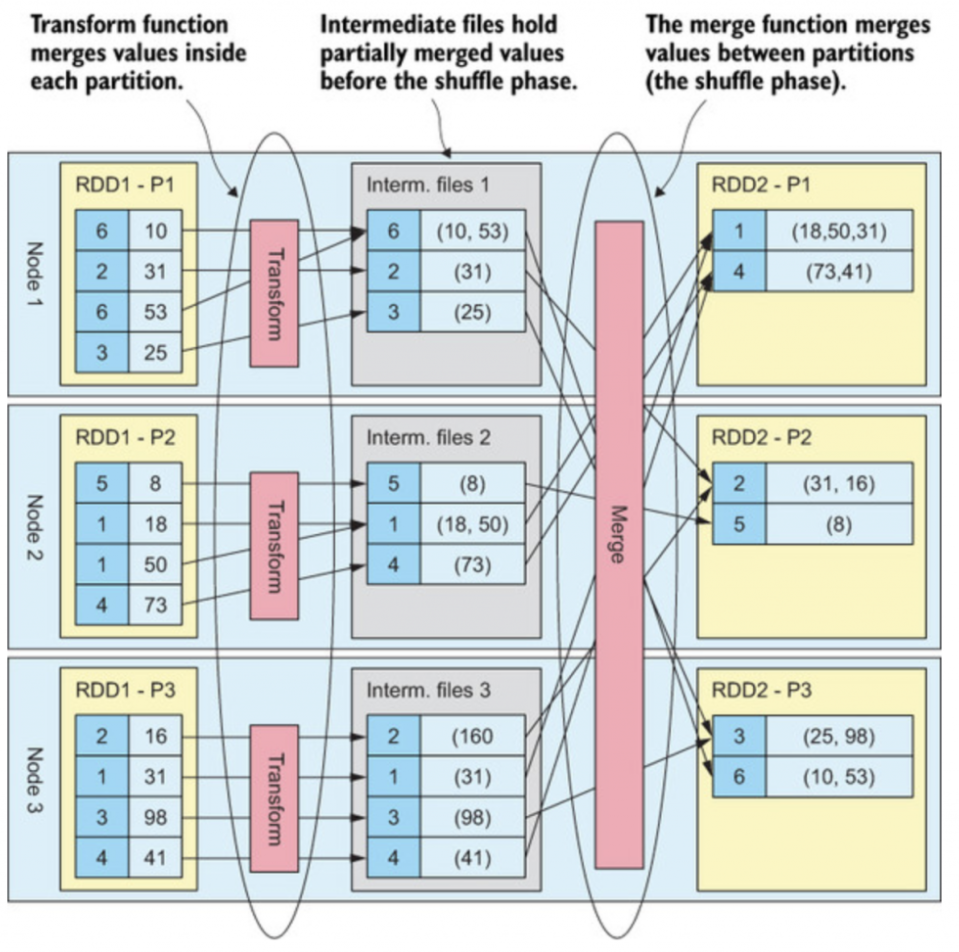
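Because each task occupies one core and handles one partition, the number of partitions and the number of available cores together determine how many "waves" of tasks a stage needs. The sketch below assumes one core per task (Spark's default for `spark.task.cpus`) and uses made-up cluster numbers; `stage_waves` is a hypothetical helper.

```python
import math


def stage_waves(num_partitions, num_executors, cores_per_executor):
    """Return (task slots, waves) for a stage that runs one task per partition."""
    slots = num_executors * cores_per_executor
    waves = math.ceil(num_partitions / slots)
    return slots, waves


# Hypothetical cluster: 4 executors with 8 cores each, stage with 200 partitions.
slots, waves = stage_waves(num_partitions=200, num_executors=4, cores_per_executor=8)
print(f"{slots} slots, {waves} waves")
```

In this example the last wave only fills 8 of the 32 slots, so choosing a partition count that is a multiple of the slot count keeps the cores busier.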

Shuffle

- A shuffle happens when a task requires data from multiple partitions, in transformations such as JOIN, GROUPBY, etc. During a shuffle, data from each partition is written to disk, bucketed by the hash of its key.

- Consider a groupby, which requires two stages:

  - Stage 1: groups by key within each partition, then writes the result to disk.

  - Stage 2: pulls the data from disk and builds the list of values for each key.
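The two stages can be mimicked in plain Python to show where the data moves. This is a simplified model, not Spark's actual shuffle implementation: in-memory lists stand in for the shuffle files on disk, `zlib.crc32` stands in for Spark's partitioner hash, and the function names, sample data, and `NUM_REDUCERS` value are all hypothetical.

```python
import zlib
from collections import defaultdict

NUM_REDUCERS = 2  # number of partitions after the shuffle (illustrative)


def bucket_for(key):
    """Pick the target partition from a deterministic hash of the key."""
    return zlib.crc32(key.encode("utf-8")) % NUM_REDUCERS


def stage1_map_side(partition):
    """Stage 1: group values by key within one partition, split into buckets."""
    buckets = [defaultdict(list) for _ in range(NUM_REDUCERS)]
    for key, value in partition:
        buckets[bucket_for(key)][key].append(value)
    return buckets  # stands in for the shuffle files written to local disk


def stage2_reduce_side(reducer_id, all_map_outputs):
    """Stage 2: pull this reducer's bucket from every map output and merge it."""
    grouped = defaultdict(list)
    for map_output in all_map_outputs:
        for key, values in map_output[reducer_id].items():
            grouped[key].extend(values)
    return dict(grouped)


partitions = [
    [("a", 1), ("b", 2)],
    [("a", 3), ("c", 4)],
    [("b", 5), ("a", 6)],
]

map_outputs = [stage1_map_side(p) for p in partitions]
results = [stage2_reduce_side(r, map_outputs) for r in range(NUM_REDUCERS)]

for reducer_id, grouped in enumerate(results):
    print(reducer_id, grouped)
```

Because the bucket depends only on the key, every value for a given key ends up at the same reducer, no matter which input partition it started in.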
