To quickly launch Spark and Kafka for local development, Docker is the top choice thanks to its flexibility and isolated environments. It saves a lot of time compared with manually installing a bunch of packages, and it avoids dependency conflicts between them.

Prerequisites

Your local computer needs the following installed:

- Docker

- Docker Compose

Then clone the repo below and start docker-compose:

```shell
git clone [email protected]:vanducng/spark-kafka-docker-compose.git
cd spark-kafka-docker-compose
docker-compose up
```
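A minimal preflight sketch, assuming a POSIX `sh`: the hypothetical helper `require_cmds` reports any listed command that is not on your `PATH`, so you can confirm both prerequisites before running the commands above. Note that newer Docker installations ship Compose as the `docker compose` subcommand rather than a separate `docker-compose` binary; in that case check for `docker` only and use `docker compose` in place of `docker-compose`.

```shell
#!/bin/sh
# Hypothetical preflight helper (not part of the repo).
# Prints each command that is not found on PATH to stderr.
# Returns 0 when every command is found, 1 otherwise.
require_cmds() {
    missing=0
    for cmd in "$@"; do
        if ! command -v "$cmd" >/dev/null 2>&1; then
            echo "missing: $cmd" >&2
            missing=1
        fi
    done
    return "$missing"
}

# Usage, checking the two prerequisites listed above:
# require_cmds docker docker-compose && docker-compose up
```

If the check passes, `docker-compose up -d` starts the same stack in the background instead of attaching to its logs, and `docker-compose down` stops and removes its containers when you are done.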

Docker Compose

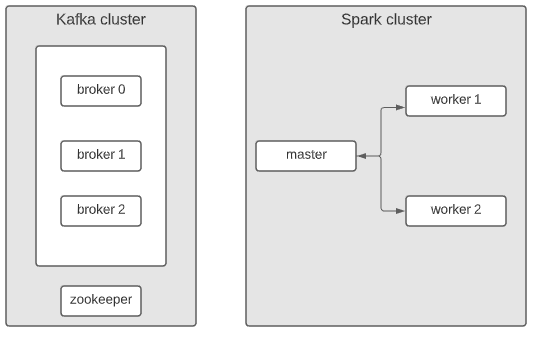

The docker-compose file defines two main services, Kafka and Spark, summarized below:

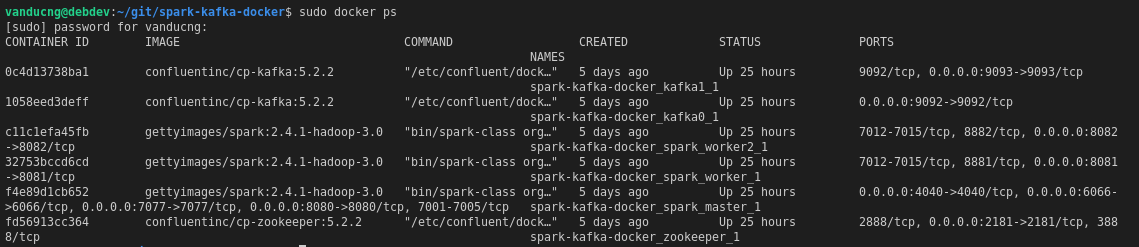

Here is the list of containers after starting docker-compose:

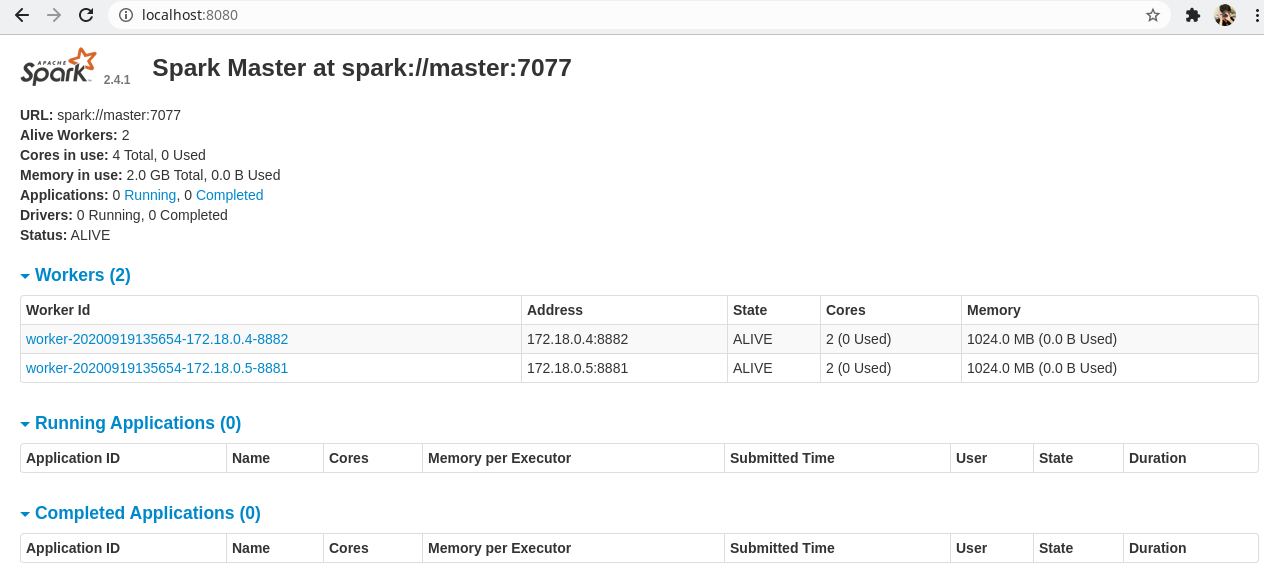
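To check the container list from a script instead of by eye, a sketch along these lines reads running container names on stdin (for example from `docker ps --format '{{.Names}}'`) and reports any expected name that is missing. The helper `expect_containers` and the names in the usage line are hypothetical placeholders; substitute the names that `docker ps` shows for this repo. Matching is by substring, because Compose usually prefixes container names with the project name.

```shell
#!/bin/sh
# Hypothetical helper (not part of the repo).
# Reads container names from stdin, one per line, and checks that each
# argument appears as a substring of at least one of them.
# Returns 0 if all are present, 1 if any is missing.
expect_containers() {
    names=$(cat)
    status=0
    for want in "$@"; do
        # -q: no output, -F: fixed-string (not regex) match
        if ! printf '%s\n' "$names" | grep -qF -- "$want"; then
            echo "not running: $want" >&2
            status=1
        fi
    done
    return "$status"
}

# Usage (container names below are placeholders):
# docker ps --format '{{.Names}}' | expect_containers kafka spark-master
```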

Access the Spark master web UI at http://localhost:8080.
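The master UI may take a few seconds to respond after the containers start. A minimal sketch, assuming `curl` is installed, that polls a URL about once per second until it answers with a non-error status or a timeout expires; `wait_for_url` is a hypothetical helper name, not something the repo provides.

```shell
#!/bin/sh
# Hypothetical helper (not part of the repo).
# Arguments: URL, timeout in attempts/seconds (default 60, approximate).
# Returns 0 as soon as curl gets a response below HTTP 400, 1 on timeout.
wait_for_url() {
    url=$1
    timeout=${2:-60}
    elapsed=0
    while [ "$elapsed" -lt "$timeout" ]; do
        # -f: fail on HTTP 4xx/5xx; -s: silent; -o: discard the body
        if curl -fs -o /dev/null --max-time 2 "$url"; then
            return 0
        fi
        sleep 1
        elapsed=$((elapsed + 1))
    done
    echo "timed out waiting for $url" >&2
    return 1
}

# Usage:
# wait_for_url http://localhost:8080 120 && echo "Spark master UI is up"
```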
